The group existed on Facebook for more than six years, had thousands of members, and was dedicated to exposing online dating scams. Without any prior warning, Facebook removed the community and simultaneously blocked the personal profiles of its admins without the possibility of recovery. In practice, this means that instead of supporting a volunteer initiative to combat fraud, the platform has effectively given an advantage to scammers by depriving users of a trusted source of verified information and warnings.

The DatingScammer.info group was created with a noble mission – to protect people from scammers operating on dating platforms.

For more than six years, the community did important public-interest work, publishing:

- Examples of fake profiles on dating sites and tips on recognizing them;

- Information about real people engaged in fraud, published within the law and platform rules;

- News and analytical articles about various types of fraud on dating sites and apps.

Regular readers included scam victims, OSINT volunteers, and users wanting to learn how to spot suspicious contacts.

How It Happened

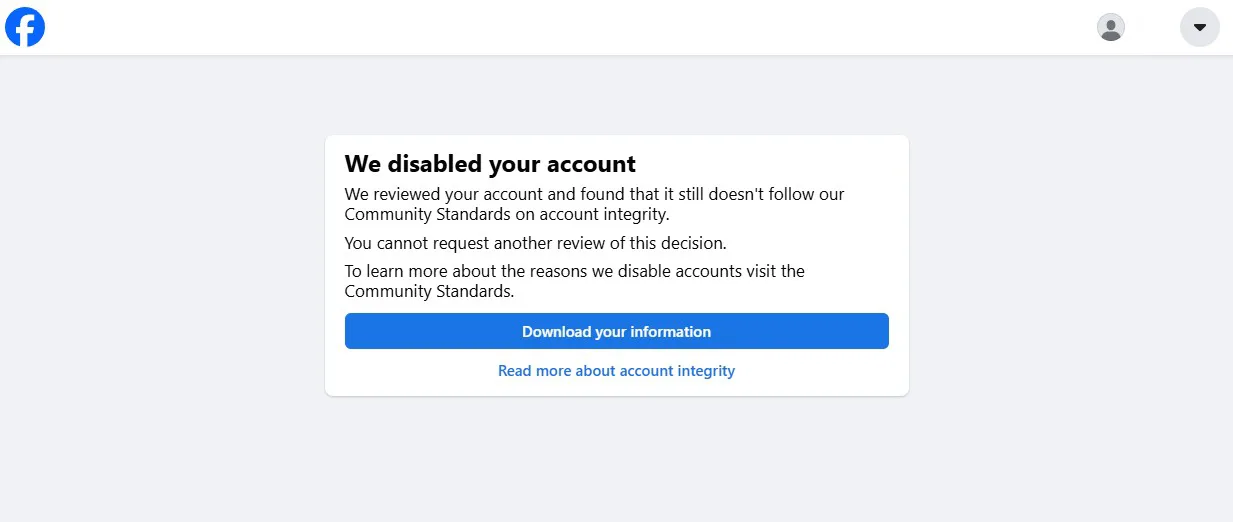

According to our administrators, the group was deleted suddenly, without any prior warnings or “yellow cards.” At the same time, the admins’ personal accounts were blocked without the right to appeal and without explanation. From a user’s perspective, this feels like a complete lack of direct communication with technical support: there is no way to open a proper case, get a transparent breakdown of the violation, or contest the decision in a reasonable timeframe. (While Meta publicly claims to have internal review processes for moderation decisions, in practice their accessibility and effectiveness remain opaque to users.)

Why This Is Dangerous for Legitimate Users

Deleting an anti-fraud community creates an information vacuum. Scammers use social platforms to contact victims at industrial scale: the fewer public countermeasures and exposure posts there are, the easier it is for them to “burn” one account and restart the same scheme under another.

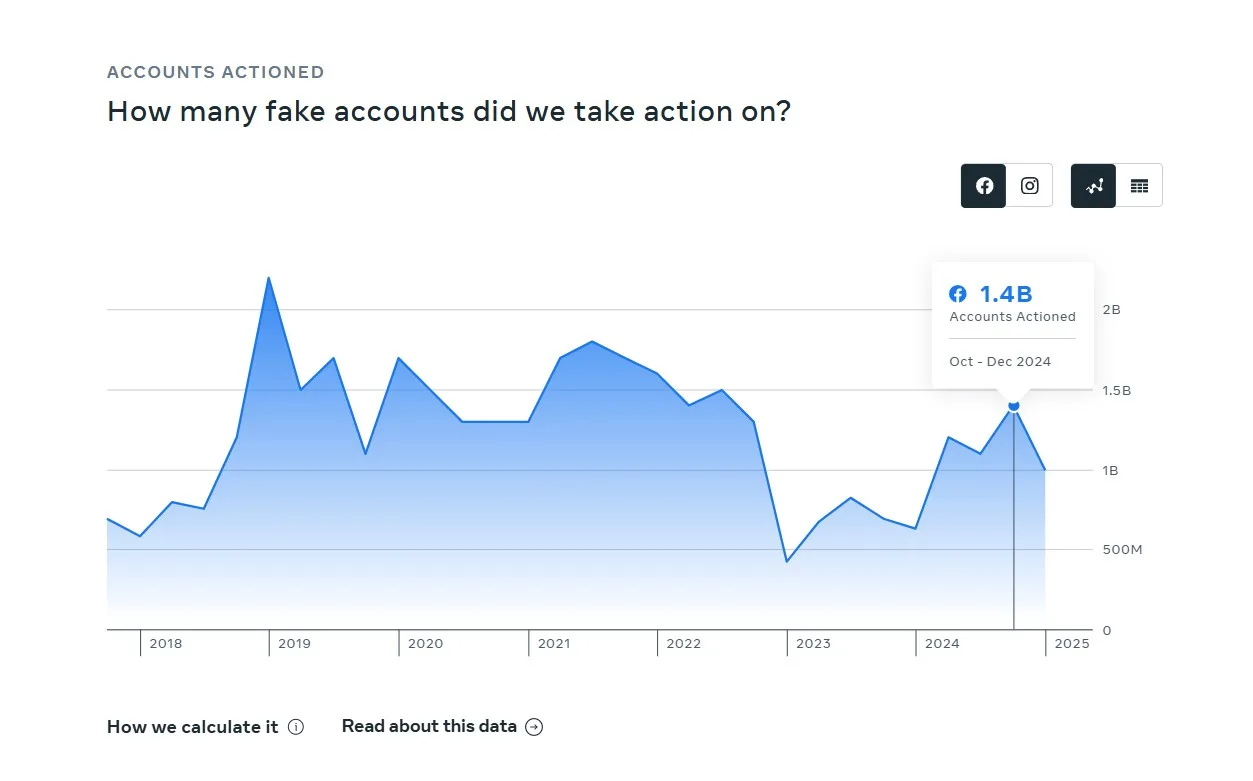

The irony is that Meta’s own reports confirm the enormous scale of the fake account problem. According to its official Transparency Center, in Q1 2025 fake accounts were estimated at about 3% of Facebook’s worldwide monthly active users (MAU). At Facebook’s scale, that means tens of millions of active fake accounts able to reach ordinary people every day.
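To put the 3% figure in perspective, here is a rough back-of-the-envelope calculation. The MAU value is our own assumption, not a number from the report cited above: we take roughly 3 billion, in line with figures Meta has publicly reported in recent years. The function and constant names are illustrative only.

```python
# Rough estimate of active fake accounts implied by a prevalence percentage.
# ASSUMED_FACEBOOK_MAU is an assumption for illustration (about 3 billion);
# it is not taken from the transparency report discussed in this article.
ASSUMED_FACEBOOK_MAU = 3_000_000_000
FAKE_PREVALENCE_PERCENT = 3  # "about 3%" per the Q1 2025 estimate cited above


def estimated_fake_accounts(mau: int, prevalence_percent: int) -> int:
    """Return the number of accounts that a whole-number percentage of MAU represents."""
    # Integer arithmetic avoids floating-point rounding surprises.
    return mau * prevalence_percent // 100


if __name__ == "__main__":
    estimate = estimated_fake_accounts(ASSUMED_FACEBOOK_MAU, FAKE_PREVALENCE_PERCENT)
    print(f"Estimated active fake accounts: {estimate:,}")
```

Under that assumption, the estimate comes to about 90 million accounts. The exact result moves with the MAU figure you plug in, but the order of magnitude does not.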

Historically, the company has removed hundreds of millions to billions of fake accounts every quarter. For example, in Q1 2019, Facebook reported removing 2.2 billion fake accounts – a record surge linked to automated attacks.

Even outside “peak” quarters, the numbers are massive: in Q3 2023, Meta reported removing 827 million fake accounts. Yearly totals run into the billions of removed accounts.

Independent analysts aggregating Meta’s data estimate that since 2017, the total number of removed fake accounts is in the tens of billions. The exact yearly numbers vary depending on the methodology, but the scale is systemic and long-standing.

Where Moderation Logic Breaks

- Lack of transparency – Users and admins are not told which post or action triggered the sanction or how to fix the issue.

- No effective feedback channel – The formal appeal forms often fail to provide a clear case status or review deadline. As a result, legitimate anti-fraud initiatives face harsher risks than serial scammers, for whom account blocks are just the cost of doing business.

- Chilling effect – Seeing what happened to this group, other scam-awareness moderators may self-censor, reducing the amount of valuable preventive information shared.

Why This Looks Like Support for Scammers

By allowing an endless conveyor belt of new fake accounts while shutting down spaces where users learn to identify scams, the platform effectively makes scammers’ work easier. Fighting fraud can’t rely solely on mass automated removals; it requires a full ecosystem – public education, targeted case analysis, and the ability to quickly escalate erroneous moderation.

What the Platform Could and Should Do

- Ensure transparency of moderation decisions: provide a clear case ID, the exact rule violated, and a workable path to correction.

- Create a fast-track appeal channel for anti-fraud communities and investigations of public interest.

- Whitelist trusted initiatives, giving them higher trust scores and direct support contacts.

- Align policy with transparency reports: if millions of fake accounts are officially acknowledged, moderation should not punish those who help users recognize them.

The removal of the DatingScammer.info group and the banning of its administrators without warning is a striking example of how well-intentioned automated moderation can backfire. When a platform deletes billions of fake accounts annually yet eliminates independent sources of education and prevention, the winners are not honest users but those who profit from deception. Facebook needs not only strong algorithms but also accountability for its decisions, transparency in its appeals process, and genuine partnerships with those working to make the internet safer.